This paper argues against the viability of the simulation hypothesis, when taken literally, as a reality theory. However, insofar as perception is a mental function that generates a representation of reality, perception can itself be considered a simulation. Moreover, since reality is, by definition, the set of everything that is real, perception belongs to reality, and it follows that reality simulates itself. To that extent, we can form a viable application of the simulation hypothesis as one aspect of a more comprehensive reality theory. We specifically draw on the mathematical structure of simulations, along with the Interface Theory of Perception, the Fitness-Beats-Truth Theorem, and emergent complexity theory, to argue that reality is a self-simulation, with perception as a key simulation function for conscious agents acting within reality, as reality.

Defining “simulation”

Simulation refers to the process of creating a model of a real-world system or process and then executing that model on a computer or other device to analyze the system’s behavior. In computer science, simulation is a crucial tool for analyzing the behavior of complex systems and for testing new algorithms and programs before they are deployed in the real world (Kelton, Sadowski, & Sadowski, 2018).

In virtual worlds, simulation is used to create digital environments that can replicate the behavior of real-world or imagined worlds. These environments can be used for a variety of purposes, such as entertainment, education, training, or scientific research. For example, flight simulators are commonly used to train pilots, while virtual reality environments are used for immersive experiences in games or educational settings (Kopper, Hauber, & Lemke, 2011).

Simulation technology has advanced significantly in recent years, allowing for more complex and realistic simulations to be created. This has led to new applications of simulation technology in areas such as autonomous vehicles, robotics, and artificial intelligence, where simulations can be used to test and refine algorithms in a safe and controlled environment (Golodoniuc, Gao, & Sorensen, 2021).

Simulations are often described in terms of their relationship to reality and the different levels of reality they can represent. At the most basic level, simulations can be thought of as representing a simplified or abstracted version of reality, while at higher levels of fidelity, they can become increasingly complex and detailed, approaching a level of realism that is difficult to distinguish from reality itself (Kelton, Sadowski, & Sadowski, 2018).

In some cases, simulations can represent a level of reality that is completely distinct from the physical world. For example, virtual reality environments can be created that simulate entirely imaginary or impossible worlds, such as a science fiction universe or a fantasy realm.

At the other end of the spectrum, simulations can represent a level of reality that is almost indistinguishable from the physical world. For example, simulations can be created to replicate the behavior of complex systems such as traffic flow or weather patterns, and these simulations can provide highly accurate predictions of real-world behavior (Kelton, Sadowski, & Sadowski, 2018).

One important concept in understanding the relationship between simulations and reality is the idea of “ontological levels.” This refers to the different levels of abstraction or granularity at which a system or phenomenon can be described. For example, the behavior of a complex system like an airplane can be described at different ontological levels, such as the mechanical properties of the airplane’s components, the aerodynamic properties of the wings, or the behavior of the airplane as a whole (Rosenberg, 2006).

The simulation hypothesis vs. argument

The simulation hypothesis suggests that our reality is actually a computer simulation created by a highly advanced civilization. This hypothesis has gained popularity in recent years due to the advancement of computer technology and the potential for artificial intelligence to create realistic simulations of reality (Bostrom, 2003).

The simulation argument, proposed by philosopher Nick Bostrom, is more specific. It holds that at least one of three propositions must be true: almost all civilizations go extinct before they can run realistic simulations of reality; almost no civilizations that reach that capability choose to run such simulations; or we are almost certainly living in a simulation. The third option rests on the assumption that civilizations capable of creating advanced simulations would likely create many of them, so that simulated observers would vastly outnumber unsimulated ones, making it more likely that we are living in a simulated reality than in the “real” reality (Bostrom, 2003).

While the simulation hypothesis and the simulation argument are related, they are not identical. The simulation hypothesis is simply the claim that our reality is a simulation, while the simulation argument gives probabilistic grounds for taking that claim seriously: if the first two propositions are false, then it is probable we are living in a simulation.

There are several reasons why the simulation hypothesis and the simulation argument could be right. For example, the rapid advancement of computer technology and the potential for artificial intelligence to create highly realistic simulations suggest that it is becoming increasingly feasible to create simulations of reality. Additionally, if it is possible to create one simulation, it is plausible that many such simulations would be created, including simulations of historical periods or even entire universes.

However, there are also several reasons why the simulation hypothesis and the simulation argument could be wrong. For one, the existence of a highly advanced civilization capable of building realistic simulations is itself a highly speculative proposition. Furthermore, even if such a civilization exists, it is unclear why it would build simulations of reality, or why it would choose to simulate our specific reality.

What about evidence for or against the hypothesis? In his book Reality+, philosopher David Chalmers discusses the simulation hypothesis and concludes that it is difficult to determine whether or not reality is a simulation. He notes that while there is currently no empirical evidence to support the idea that we are living in a simulation, it is also difficult to rule out the possibility entirely, because a high-fidelity simulation may not provide any evidence of its own existence, yet still exist (Chalmers, 2019).

Further complicating the issue is that certain forms of our logic might allow for contradictions to be part of reality. For instance, Bernardo Kastrup and Graham Priest both argue that the nature of reality and logic may entail contradictions and absurdities, challenging traditional assumptions about the nature of truth and rationality.

Kastrup argues that reality itself may be fundamentally contradictory, in the sense that it may be simultaneously composed of both objective and subjective elements. He suggests that traditional metaphysical frameworks, which assume a strict divide between subjective experience and objective reality, may be unable to fully account for the nature of reality as we experience it (Kastrup, 2018).

Priest, on the other hand, argues that logic itself may be subject to contradictions and paradoxes, and that this should not be seen as a limitation of the discipline, but rather as an inherent feature of reality itself. He suggests that the existence of contradictions in logic may be evidence of the fundamentally paradoxical nature of the universe, and that attempts to resolve these contradictions may ultimately be futile (Priest, 2006).

Both Kastrup’s and Priest’s arguments challenge traditional assumptions about the nature of reality and logic, suggesting that our understanding of these concepts may be more complex and multifaceted than previously thought. If they’re right, then the sacred correspondence theory of truth and the principle of bivalence, on which realism depends (and which depend on realism, in turn), are void. In that case, a simulated reality may actually provide us occasional evidence of its existence in the form of absurd happenings, as Kastrup catalogs in Meaning in Absurdity (Kastrup, 2012).

Chalmers further acknowledges that the simulation hypothesis raises a number of difficult philosophical questions, such as the nature of consciousness and the relationship between the simulated reality and the “real” reality that might exist outside of the simulation. However, he ultimately concludes that it is impossible to know for sure whether or not reality is a simulation, and that the question may ultimately be beyond the reach of human knowledge (Chalmers, 2019).

Does the simulation hypothesis really tell us anything?

Upon closer inspection, the idea that reality is a simulation created by an advanced civilization encounters numerous logical and philosophical problems that undermine it, at least as a meaningful reality theory.

Reality is the set of everything that exists, such that there is nothing real that is external to reality. Everything that is real is within reality. If two things have a real difference between each other, then they (and that difference) still share the similarity of being within reality. To that extent, everything within reality is similar in spite of any other real difference.

The difference relation between two real, different things necessarily exists within the medium of reality. In that way, reality is at base a single medium of potential, from which difference relationships are actualized, and “things” are realized. Therefore, no difference between two real things is absolute. The very fact that we can discuss their difference relationship in language, which has a structure (“rule set”) that maps onto reality, tells us that they share an ontological medium, of which any “thing” is an excitation.

A handy metaphor is that of ocean waves. The still, calm, base surface of the water is homogeneous. It is a single medium, whose excitations evolve according to a natural “rule set” (determined by factors like wind, currents, temperatures, etc.) to form waves. Each wave appears different from each other wave, and we can even measure their dynamics to find real differences between them. Those differences can be described using languages (perceptual, cognitive, natural, and formal) that map onto and correspond with the structure of the reality they describe. However, no individual wave and no difference between waves in a given set of waves exist independently of their medium, the ocean.

Therefore, the simulation hypothesis really tells us little about the origins of reality or its ultimate nature. If our universe exists on the hard drive of some advanced civilization, then reality includes both our universe and theirs, and we’re left to explain reality from their universe’s perspective. They, of course, would run into the same simulation hypothesis that we have, and so on. We’d meet an infinite regress, a sign that our thinking is off somewhere.

It doesn’t matter how many different simulated universes are in question: by virtue of the fact that they are real, they are all the same in that they belong to reality, the set of everything that is real. As such, we must have a reality theory that terminates at one entity.

However, this does not negate the possibility that reality can be described as a kind of “simulation” in a certain, non-trivial sense. Indeed, that sense, which we’ll now explore, may also explain why there seem to be degrees of plausibility and intuitiveness to the simulation hypothesis, and even more so to the simulation argument.

Mathematical structure of simulations

A simulation is a mathematical model that imitates a real-world system’s behavior or operations. It is composed of four main components: input, processor, output, and display. These components work together to create a mathematical representation of the system being modeled.

The mathematical structure of a simulation can be represented by the following equation:

Output = f(Input, Processor)

where the input represents the initial conditions and parameters of the simulation, the processor represents the rules and algorithms used to simulate the behavior of the system being modeled, and the output represents the simulated behavior of the system over time.

The input is a set of values that represent the system’s initial conditions, including its state, position, velocity, and other relevant parameters. The input is usually supplied by the user, and it is the starting point for the simulation. The input can be in the form of data, such as tables or graphs, or it can be in the form of equations or mathematical models.

The processor is the core of the simulation, and it is responsible for simulating the system’s behavior. The processor is composed of a set of algorithms and rules that determine how the system will evolve over time. The processor takes the input data and applies it to the system’s behavior model to create the output. The processor can be a set of equations or a software program, depending on the complexity of the system being modeled.

The output, presented on the display, is the result of the simulation, and it represents the system’s behavior over time. The output can be in the form of data, such as tables or graphs, or it can be in the form of visual representations, such as animations or videos. Of course, it can also be a high-fidelity virtual reality simulation. The output is the user’s primary means of interpreting and analyzing the simulation’s results.

The four components of a simulation work together to create a mathematical model of the system being modeled. The input provides the initial conditions and parameters, while the processor applies the system’s behavior model to create the output. The output represents the system’s behavior over time, while the display, which does not appear in the equation itself, presents the output to the user in a visual or other format (Barros & Verdejo, 2018; Fishwick, 2018; Law & Kelton, 2018).
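As a minimal sketch of how the four components fit together, consider an object moving at constant velocity. The rule, the names (`processor`, `simulate`, `display`), and the numbers are our own illustrative assumptions rather than part of any cited model.

```python
from typing import Callable, Dict, List

# Hypothetical names throughout: an illustration, not a model from the cited texts.
State = Dict[str, float]


def processor(state: State, dt: float) -> State:
    """Rule set: an object moving at constant velocity."""
    return {
        "position": state["position"] + state["velocity"] * dt,
        "velocity": state["velocity"],
    }


def simulate(initial: State, rule: Callable[[State, float], State],
             steps: int, dt: float) -> List[State]:
    """Output = f(Input, Processor): apply the rule repeatedly to the input."""
    history = [initial]
    for _ in range(steps):
        history.append(rule(history[-1], dt))
    return history


def display(history: List[State]) -> str:
    """Display: render the output as a plain-text table."""
    rows = [f"{i:>4} {s['position']:>9.2f}" for i, s in enumerate(history)]
    return "\n".join(["step  position"] + rows)


initial_state = {"position": 0.0, "velocity": 2.0}            # input
output = simulate(initial_state, processor, steps=3, dt=0.5)  # processor yields output
print(display(output))                                        # display
```

The display step only formats what the processor has already produced, which is why it plays no role in the equation itself.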

A more complicated equation for the mathematical structure of a simulation can be written as:

y(t+1) = f(x(t), u(t), θ)

where y(t+1) is the output variable at time t+1, which is a function of the state variables x(t), the control variables u(t), and the system parameters θ.

The state variables x(t) describe the system at time t, such as its position, velocity, temperature, and pressure. These variables can be continuous or discrete and can represent physical quantities or abstract concepts. The state variables are usually measured or estimated from real-world data, and their evolution can be modeled using differential equations, difference equations, or other mathematical models.

The control variables u(t) represent the inputs to the system at time t, such as forces, torques, voltages, or currents. These variables are usually manipulated by the user or by an external controller to achieve a desired system response. The control variables can also be modeled using differential equations, difference equations, or other mathematical models.

The system parameters θ represent the characteristics of the system that stay fixed during a run but may be unknown or uncertain, such as the friction coefficient, the mass of an object, or the environmental conditions. These parameters are usually estimated from real-world data or from experimental measurements, and they can be modeled using probability distributions, optimization techniques, or other mathematical models.

The function f represents the system model or the simulation algorithm, which maps the input variables x(t), u(t), and θ to the output variable y(t+1) at time t+1. The function f can be a deterministic or stochastic model, a linear or nonlinear model, a time-invariant or time-varying model, or a discrete or continuous model. The function f can also be implemented using different numerical methods, such as finite difference, finite element, or Monte Carlo simulation.

When the underlying model is continuous in time, the update from t to t+1 can be computed with numerical integration methods, such as Euler’s method, the Runge-Kutta methods, or the Adams-Bashforth methods, to obtain the output variables at each time step. The simulation results can then be analyzed and visualized using various tools, such as graphs, plots, animations, statistical tests, and even virtual realities.
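To make the choice of integration method concrete, the following sketch compares Euler’s method with the classical fourth-order Runge-Kutta method on a test equation of our own choosing, dx/dt = -x, whose exact solution is known. The function names and step size are illustrative assumptions.

```python
import math
from typing import Callable

Derivative = Callable[[float, float], float]


def euler_step(f: Derivative, t: float, x: float, h: float) -> float:
    """One step of Euler's method."""
    return x + h * f(t, x)


def rk4_step(f: Derivative, t: float, x: float, h: float) -> float:
    """One step of the classical fourth-order Runge-Kutta method."""
    k1 = f(t, x)
    k2 = f(t + h / 2, x + h * k1 / 2)
    k3 = f(t + h / 2, x + h * k2 / 2)
    k4 = f(t + h, x + h * k3)
    return x + h * (k1 + 2 * k2 + 2 * k3 + k4) / 6


def integrate(step, f: Derivative, x0: float, h: float, n: int) -> float:
    """Advance the state n steps of size h, starting from x0 at t = 0."""
    t, x = 0.0, x0
    for _ in range(n):
        x = step(f, t, x, h)
        t += h
    return x


def decay(t: float, x: float) -> float:
    """Test equation dx/dt = -x, with exact solution x(t) = x0 * exp(-t)."""
    return -x


exact = math.exp(-1.0)
euler_error = abs(integrate(euler_step, decay, 1.0, 0.1, 10) - exact)
rk4_error = abs(integrate(rk4_step, decay, 1.0, 0.1, 10) - exact)
print(f"Euler error: {euler_error:.2e}, RK4 error: {rk4_error:.2e}")
```

With the same step size, the higher-order method tracks the exact solution far more closely, at the cost of four evaluations of f per step instead of one.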

In other words, the mathematical structure of a simulation equation involves the input variables x(t), the control variables u(t), the system parameters θ, the system model or simulation algorithm f, and the output variable y(t+1) (Barros & Verdejo, 2018; Fishwick, 2018; Law & Kelton, 2018).
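The roles of x(t), u(t), θ, and f can be sketched with a cart that is pushed along a surface with friction. The physical setup, the parameter values, and the decision to store the time step inside θ are our own assumptions, chosen only to give each symbol something concrete to stand for.

```python
from dataclasses import dataclass
from typing import List, Tuple

StateVec = Tuple[float, float]  # x(t): (position, velocity)


@dataclass(frozen=True)
class Params:
    """theta: characteristics that stay fixed during a run (hypothetical values)."""
    mass: float
    friction: float
    dt: float


def f(x: StateVec, u: float, theta: Params) -> StateVec:
    """System model: maps state x(t), control u(t), and theta to y(t+1)."""
    position, velocity = x
    acceleration = (u - theta.friction * velocity) / theta.mass
    return (position + velocity * theta.dt,
            velocity + acceleration * theta.dt)


def run(x0: StateVec, controls: List[float], theta: Params) -> List[StateVec]:
    """Iterate the model: each output y(t+1) becomes the next state x(t+1)."""
    trajectory = [x0]
    for u in controls:
        trajectory.append(f(trajectory[-1], u, theta))
    return trajectory


theta = Params(mass=2.0, friction=0.5, dt=0.1)
controls = [1.0] * 50 + [0.0] * 50  # push for 50 steps, then let go
trajectory = run((0.0, 0.0), controls, theta)
print(trajectory[50], trajectory[100])
```

While the force is applied the cart speeds up; once the control input drops to zero, friction slows it down again, all under the same function f and the same θ.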

Now, let’s apply this technical knowledge to the study of reality.

Language, perception, and reality

The intelligibility of reality is a necessary condition for our ability to perceive and make sense of the world around us. Our ability to perceive and interpret reality is dependent on our ability to use language and communicate with others.

Language is a tool for organizing and making sense of the perceptual data that we receive from the world around us. By using language, we are able to categorize and label objects and events in the world, which allows us to form more complex concepts and understandings of our environment.

The intelligibility of reality is not just a feature of human perception, but is a necessary condition of reality itself for any form of perception or cognition by embodied conscious agents, who are part of reality, to be possible.

Reality, language, cognition, and perception are therefore inextricably linked. Without an isomorphism between reality, perception, cognition, and natural and formal languages, we would be unable to survive, let alone theorize.

In other words, the structure of reality can be considered a kind of syntax and a language all its own, which explains how and why we are able to find reality intelligible through our perception and cognition (which are also linguistic), and thus through our natural and formal languages (Santos, 2023).

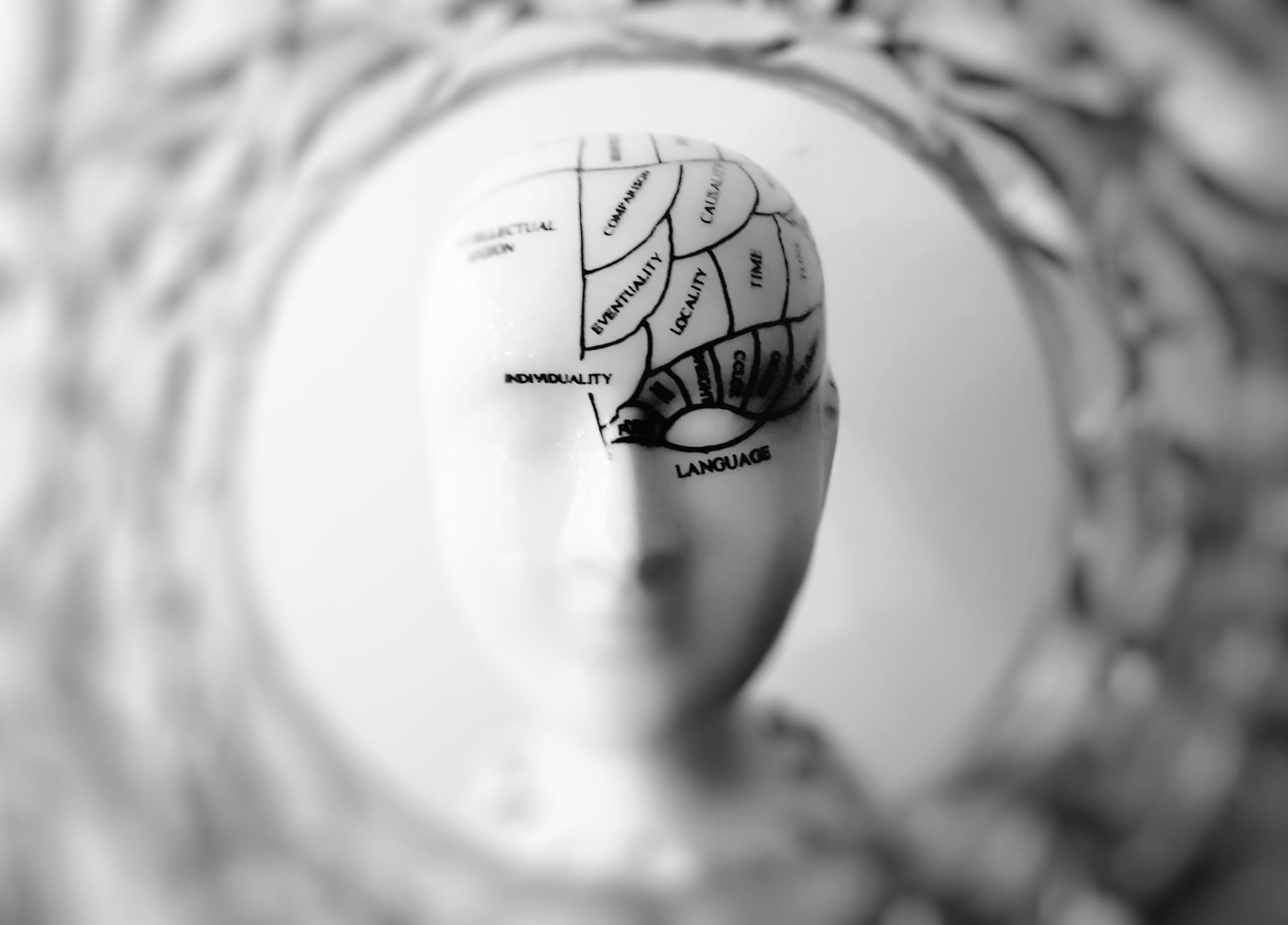

The Interface Theory of Perception

The Interface Theory of Perception, proposed by cognitive scientist Donald Hoffman, suggests that our perceptions are not a direct reflection of the physical world around us, but rather are shaped by a set of evolved interfaces that serve to simplify and streamline the complex information present in our environment (Hoffman, Singh, & Prakash, 2015). According to this theory, the objects and events that we perceive are not “real” in the sense of being objective, external entities, but are rather the result of a complex mental process of data compression and filtering.

The Fitness-Beats-Truth Theorem, developed by Hoffman, Prakash, and their collaborators, holds that natural selection can favor perceptions tuned to fitness payoffs over perceptions that accurately track the structure of the world, so long as the former help individuals survive and reproduce more effectively (Scharp, 2018). According to this theorem, there are circumstances in which non-veridical perceptions, and the beliefs built on them, are more “fit” than accurate ones, and thus more likely to be favored by natural selection.
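The mechanism behind this claim can be illustrated with a toy example. It is not the theorem or its proof, only a sketch under our own assumptions: two territories each hold a random quantity of some resource, and the payoff (a hypothetical bell-shaped `fitness` function) is highest at a moderate quantity, so that more is not always better.

```python
import math
import random
from typing import Callable


def fitness(resource: float) -> float:
    """Hypothetical payoff: too little or too much of the resource is bad."""
    return math.exp(-((resource - 50.0) ** 2) / (2 * 15.0 ** 2))


def choose_truth(a: float, b: float) -> float:
    """'Truth' strategy: sees the actual quantities and takes the larger one."""
    return max(a, b)


def choose_fitness(a: float, b: float) -> float:
    """'Fitness' strategy: sees only the payoffs and takes the better one."""
    return max(a, b, key=fitness)


def average_payoff(strategy: Callable[[float, float], float],
                   trials: int, seed: int) -> float:
    """Mean payoff of a strategy over randomly drawn pairs of territories."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        a, b = rng.uniform(0, 100), rng.uniform(0, 100)
        total += fitness(strategy(a, b))
    return total / trials


# The same seed gives both strategies the same sequence of choices.
truth_payoff = average_payoff(choose_truth, trials=10_000, seed=0)
fitness_payoff = average_payoff(choose_fitness, trials=10_000, seed=0)
print(f"truth: {truth_payoff:.3f}  fitness: {fitness_payoff:.3f}")
```

Because the payoff is not monotonic in the true quantity, the strategy that perceives quantities accurately often picks the worse territory, while the strategy that perceives only payoffs never does.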

Taken together, the Interface Theory of Perception and the Fitness-Beats-Truth Theorem suggest that our perceptions and beliefs may be shaped more by evolutionary pressures and survival needs than by objective reality. In this sense, they can be seen as a type of “simulation theory” that differs from the standard simulation hypothesis.

Rather than suggesting that our reality is a simulation in the traditional sense (on a computer created by an advanced civilization), the Interface Theory of Perception and the Fitness-Beats-Truth Theorem propose that our perceptions and beliefs are a type of simulation or simplified model of the world around us, shaped by evolutionary pressures.

Let’s explicate the structure and logic of such a perceptual apparatus by comparing it to the simulation mathematics we’ve already explored.

Perception is a simulation of reality

The simulation equation y(t+1) = f(x(t), u(t), θ) can be applied to the structure of perception as a simulation, where y(t+1) represents the perceptual experience at time t+1, x(t) represents the sensory input at time t, u(t) represents the attentional focus at time t, and θ represents the internal model of the world.

According to the Interface Theory of Perception, perception is not a direct reflection of an objective physical world but rather an evolved simulation, or interface, meant to guide behavior. The mind constructs a simplified and abstracted model of external reality (whatever it might ontologically be) based on sensory input and internal knowledge, and this model is used to generate perceptual experiences that are adaptive for survival and reproduction (Hoffman, Singh, & Prakash, 2015).

In this framework, the sensory input x(t) can be seen as the raw data that a conscious agent receives from the external world, such as light waves, sound waves, or chemical signals. The attentional focus u(t) can be seen as the selective filter that the agent’s cognition applies to the sensory input, based on current goals, interests, and expectations. In other words, that attentional focus is the function of relevance realization, as described by John Vervaeke (Vervaeke, 2018). The internal model θ can be seen as the prior knowledge that the conscious agent has about the structure and regularities of the external world, such as object persistence, gravity, or causality, all used to predict the next state of the world.

The function f represents the cognitive process that combines the sensory input x(t), the attentional focus of relevance realization u(t), and the internal model θ to generate the perceptual experience y(t+1). This process involves multiple levels of mental computation, including feature detection, object recognition, spatial mapping, temporal integration, and decision-making, and of course, relevance realization.
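The mapping can be made concrete with a deliberately simple sketch. It is not Hoffman’s formalism or Vervaeke’s account of relevance realization; the feature names, the numbers, and the linear blending rule are all our own assumptions, meant only to show how x(t), u(t), and θ could jointly determine y(t+1).

```python
from typing import Dict

Features = Dict[str, float]


def perceive(sensory: Features, attention: Features, model: Features) -> Features:
    """y(t+1) = f(x(t), u(t), theta): blend expectation and evidence per feature."""
    percept = {}
    for feature, expected in model.items():
        gain = attention.get(feature, 0.0)         # u(t): 0 = ignored, 1 = fully attended
        observed = sensory.get(feature, expected)  # x(t): fall back on the expectation
        percept[feature] = (1.0 - gain) * expected + gain * observed
    return percept


model = {"brightness": 0.5, "loudness": 0.2}       # theta: what the agent expects
sensory = {"brightness": 0.9, "loudness": 0.8}     # x(t): what arrives at the senses
attention = {"brightness": 1.0, "loudness": 0.25}  # u(t): what the agent attends to
percept = perceive(sensory, attention, model)
print(percept)
```

In this sketch, a fully attended feature is experienced as sensed, while a weakly attended one is experienced mostly as the internal model predicts, so the percept is a construction rather than a copy of the input.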

Hoffman’s Interface Theory of Perception emphasizes that perceptual experience is not a literal view of an objective physical reality, but rather a mental construction that is optimized for survival and reproduction. Spacetime and physicality are a simulated interface, existing as an epistemic entity, not as an ontic one.

A given conscious agent selects and simplifies the sensory information based on the data’s evolutionary value, and it ignores or distorts the information that is irrelevant or misleading (Hoffman, Singh, & Prakash, 2015).

Perceptual experience is therefore a product of the mind’s simulation of reality rather than a clear window onto reality itself. The physical world is thus akin to a Schopenhauerian representation of reality, as opposed to a literal presentation of the same (Schopenhauer, 1819).

The idea that the world of our perception is a simulation of a deeper reality is nothing new in human thought, and indeed dates back to at least the Pre-Socratics in the Western canon. Our contemporary method of contextualizing the concept is to use technological and computational terminology, such as “simulation”. However, we shouldn’t let those catchy words fool us; the ideas themselves have been previously discussed in a multitude of ways, as each new thinker added to the complexity and precision of those ideas.

Now, it is our turn. How might our present-day context add to that canon?

Reality simulates itself

Conscious agents and their perceptual apparatuses are part of reality. Therefore, to the extent that perception is a simulation of reality, it is also reality conducting a self-simulation.

Because reality is, by definition, all that exists, there is nothing outside of reality that is real enough to determine its existence. As such, reality is not just an object of observation, but rather, it is a self-contained, self-generating entity that simulates itself in the way we’ve already explicated.

Namely, reality is an information system (Wheeler, 1990) that generates its own reality through a recursive process of lowering entropy by giving form to its ground state of potential (Campbell, T., 2003). The etymology of “information”, after all, is to give form to potential, thereby reducing entropy (Wiener, 1965).

The equation for the mathematical structure of a simulation, as mentioned earlier, is:

y(t+1) = f(x(t), u(t), θ)

where y(t+1) is the output variable at time t+1, which is a function of the input variables x(t), u(t), and the system parameters θ. When the equation is applied to reality theory, the universe is the system being simulated, and the input variables x(t), u(t), and θ are the fundamental properties of the universe, such as matter, energy, and physical laws. The function f is the simulation algorithm that generates the output variable y(t+1), which represents the reality of the universe at time t+1.

Reality is a self-simulation because it is capable of generating both its own reality and its own representation thereof.

It does so by using the previous state of reality as a template for the next state of reality. In other words, the universe uses its own reality as input to generate the next state of reality, much the same way evolutionary processes work under universal Darwinism and emergent complexity theory in complexity science (Anderson, 1972; Campbell, D. T., 1974; Azarian, 2022).
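The idea of a system that uses its own previous state as the only input to its next state can be sketched with an elementary cellular automaton. The automaton, the choice of Rule 110, and the wrap-around edges are our own illustrative assumptions, not a claim about how the universe is implemented.

```python
from typing import List

RULE = 110  # hypothetical choice; any elementary rule number from 0 to 255 works


def step(cells: List[int], rule: int = RULE) -> List[int]:
    """Compute the next state from the current state alone (wrap-around edges)."""
    n = len(cells)
    nxt = []
    for i in range(n):
        left, centre, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        neighbourhood = (left << 2) | (centre << 1) | right
        nxt.append((rule >> neighbourhood) & 1)  # look up the rule's output bit
    return nxt


def evolve(cells: List[int], generations: int) -> List[List[int]]:
    """Feed each output back in as the next input."""
    history = [cells]
    for _ in range(generations):
        history.append(step(history[-1]))
    return history


start = [0] * 15 + [1]
for row in evolve(start, 8):
    print("".join("#" if cell else "." for cell in row))
```

Nothing outside the row of cells is consulted at any step, yet structured patterns accumulate over the generations, which is the sense of self-generation and emergent complexity that the paragraph above appeals to.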

Like evolutionary processes, the self-simulation of the universe is recursive: each output becomes the input to the next iteration, and the process repeats indefinitely. The universe is also a fractal system, which means that it is self-similar at different scales, akin to Douglas Hofstadter’s butterfly, a Gplot “showing energy bands for electrons in an idealized crystal in a magnetic field”, also called “a picture of God” (Hofstadter, 1979). In other words, the self-simulation of reality occurs at different levels of complexity, from subatomic particles to galaxies.

Hofstadter’s butterfly (Hofstadter, 1976).

Because of this recursion, the levels of reality display a structural isomorphism to each other, making reality intelligible from all levels, if not comprehensible. Each level entails an isomorphic syntax to all other levels, including to our perception, our cognition, and our natural and formal languages.

The “headset problem” for physicalism

What I’ll call the headset problem for physicalism is its narrow scope: it limits itself to the physical world, or that which can be perceived through our senses. Measurement devices may detect physical phenomena that we can’t directly perceive, but we must still perceive the devices themselves. As we’ve seen, according to some theories, such as simulation theory, reality may be more than just the physical, and this poses a challenge for physicalism. If reality is a simulation, then there may be a deeper reality beyond what we can perceive through our senses or detect through our instruments (Bostrom, 2003).

Under simulation theories, physical laws and constants, as well as the properties of matter and energy, are not fundamental but are part of the simulation. This implies that there may be a deeper reality beyond what we can observe or measure, which means that the physical world may be only a simulation of that deeper reality (Bostrom, 2003).

While we’ve already argued that the literal simulation theory is not a viable reality theory, we’ve also shown how reality can be considered a self-simulation. Perception, likewise, has been shown to be a simulating function of that reality. Therefore, spacetime and physicality are not what we perceive, but rather how we perceive.

It then logically follows that the perceiver, consciousness, must precede physicality and spacetime.

This raises the question of whether physicalism can account for the full range of human experience and cognition. For example, if mental states and consciousness are not reducible to physical states and processes, but are instead fundamental, then physicalism will not be able to explain them. Hence, the hard problem of consciousness (Chalmers, 1995).

Moreover, because physicalism seeks to reduce consciousness to the physical, and since the physical is a perceptual interface (an epistemic entity, not an ontic one), physicalism will always entail an inherent dualism, even as it claims to be monist. Physical entities, as purely quantitative, and consciousness, as purely qualitative, will always need to be treated as separate under the theory that the physical brain generates consciousness. Thus, physicalism will never solve the hard problem of consciousness, as the explanatory gap is the result of logical incoherence and internal inconsistency at the core of physicalism’s central claims.

Physicalism will remain the study of the interface, and physicalists will be locked into the “headset”, able to inform us about only the simulation. That will still be useful for operating within the simulation, such as our progress in the natural sciences, but it will fail as an ontological project in search of a reality theory.

Indeed, idealism is the only viable metaphysics remaining, since it takes consciousness as the “substrate” of reality and treats the physical as an image of information within that fundamental consciousness. This description precisely maps onto the simulation structures we’ve explicated in this paper.

By discovering that reality is a self-simulating information system (one that generates its own reality through a recursive process of lowering entropy, giving form to its ground state of potential), we also discover what reality is: consciousness. In essence, because the intelligibility of reality is only possible if the above arguments are valid (Santos, 2023), we must either accept metaphysical idealism or abandon intelligibility. The latter would require us to abandon science and philosophy altogether, and we’d also have no way to explain the successful survival of the biosphere. Therefore, we must accept intelligibility, and thus also idealism.

Bibliography

Anderson, P. W. (1972). More is different. Science, 177(4047), 393-396.

Azarian, B. (2022). The Romance of Reality: How the Universe Organizes Itself to Create Life, Consciousness, and Cosmic Complexity. Dallas, TX: BenBella Books.

Barros, F. G., & Verdejo, F. (2018). Modeling and simulation of complex systems. Cham, Switzerland: Springer.

Bostrom, N. (2003). Are you living in a computer simulation? Philosophical Quarterly, 53(211), 243-255.

Campbell, D. T. (1974). Evolutionary epistemology. In The philosophy of Karl Popper (pp. 413-463). Cambridge: Cambridge University Press.

Campbell, T. (2003). My Big TOE: The complete trilogy. Trafford Publishing.

Chalmers, D. (1995). Facing up to the problem of consciousness. Journal of Consciousness Studies, 2(3), 200-219.

Chalmers, D. (2019). Reality+. Oxford University Press.

Fishwick, P. A. (2018). Handbook of dynamic system modeling. Boca Raton, FL: CRC Press.

Golodoniuc, P., Gao, L., & Sorensen, K. (2021). The role of simulation in autonomous vehicle research and development. Transportation Research Part C: Emerging Technologies, 124, 103146.

Hoffman, D. D., Singh, M., & Prakash, C. (2015). The interface theory of perception. Psychonomic Bulletin & Review, 22(6), 1480-1506.

Hofstadter, D. R. (1976). Energy levels and wavefunctions of Bloch electrons in rational and irrational magnetic fields. Physical Review B, 14(6), 2239-2249. doi:10.1103/PhysRevB.14.2239

Hofstadter, D. R. (1979). Gödel, Escher, Bach: An eternal golden braid. New York, NY: Basic Books.

Kastrup, B. (2012). Meaning in absurdity: What bizarre phenomena can tell us about the nature of reality. Iff Books.

Kastrup, B. (2018). The idea of the world: A multi-disciplinary argument for the mental nature of reality. Iff Books.

Kelton, W. D., Sadowski, R. P., & Sadowski, D. A. (2018). Simulation with Arena (6th ed.). McGraw-Hill Education.

Kopper, R., Hauber, J., & Lemke, H. U. (2011). Recent advances in virtual reality: research challenges across disciplines. Virtual Reality, 15(1), 3-4.

Law, A. M., & Kelton, W. D. (2018). Simulation modeling and analysis. New York, NY: McGraw-Hill.

Priest, G. (2006). Doubt truth to be a liar. Oxford University Press.

Rosenberg, A. (2006). Philosophy of science: A contemporary introduction (2nd ed.). Routledge.

Santos, M. (2023). Why Reality Must Be Intelligible: Language & Perception. BCP Journal, 14. Retrieved from https://michaelsantosauthor.com/bcpjournal/why-reality-must-be-intelligible-language-perception/

Scharp, K. (2018). The fitness-beats-truth theorem and the proper role of philosophical intuition. Synthese, 195(3), 1183-1203.

Schopenhauer, A. (1819). The World as Will and Representation.

Vervaeke, J. (2018). On the nature of relevance realization. Journal of Consciousness Studies, 25(5-6), 6-36.

Wheeler, J. A. (1990). Information, physics, quantum: The search for links. In W. Zurek (Ed.), Complexity, entropy, and the physics of information (pp. 3-28). Redwood City, CA: Addison-Wesley.

Wiener, N. (1965). Cybernetics: or Control and Communication in the Animal and the Machine. MIT Press.